Entropy (from Ancient Greek ἐντροπία, "turning", "transformation") is a term widely used in the natural and exact sciences. It was first introduced in thermodynamics as a function of the state of a thermodynamic system that measures the irreversible dissipation of energy. In statistical physics, entropy characterizes the probability of a given macroscopic state. Beyond physics, the term is widely used in mathematics, in information theory and mathematical statistics.

Entropy can be interpreted as a measure of the uncertainty (disorder) of a system, for example of an experiment (trial) that can have different outcomes, and hence as a measure of the amount of information. Accordingly, another interpretation of entropy is the information capacity of a system. This interpretation is linked to the fact that Claude Shannon, who created the concept of entropy in information theory, initially wanted to call this quantity information.

The concept of information entropy is used in information theory and mathematical statistics, as well as in statistical physics (the Gibbs entropy and its simplified version, the Boltzmann entropy). Mathematically, information entropy is the logarithm of the number of available states of the system. The base of the logarithm can vary; it determines the unit of entropy. This logarithmic dependence on the number of states makes entropy additive for independent systems. If the states differ in how accessible they are (i.e., they are not equiprobable), the number of states must be understood as their effective number, defined as follows. Let the states of the system be equiprobable, each with probability $p$. Then the number of states is

$$N = 1/p, \qquad \log N = \log(1/p).$$

When the states have different probabilities $p_i$, consider the weighted average

$$\log \overline{N} = \sum_{i=1}^{n} p_i \log(1/p_i),$$

where $\overline{N}$ is the effective number of states. This interpretation leads directly to Shannon's expression for information entropy:

$$H = \log \overline{N} = -\sum_{i=1}^{n} p_i \log p_i.$$
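The two formulas for $\log \overline{N}$ and $H$ can be sketched in Python. The sketch assumes base-2 logarithms (entropy in bits), and the function names are illustrative rather than taken from any library. It computes $H$ and the effective number of states $\overline{N} = 2^H$. Zero-probability states are skipped, since their contribution $p \log p$ tends to 0.

```python
import math


def shannon_entropy(probs, base=2.0):
    """H = -sum(p_i * log p_i); states with p_i = 0 contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0) / math.log(base)


def effective_states(probs, base=2.0):
    """Effective number of states N_eff, defined by log N_eff = H."""
    return base ** shannon_entropy(probs, base)


uniform = [0.25, 0.25, 0.25, 0.25]  # four equiprobable states
skewed = [0.5, 0.25, 0.25]          # three states, not equiprobable

print(shannon_entropy(uniform), effective_states(uniform))
print(shannon_entropy(skewed), effective_states(skewed))
```

In the uniform case, $H = 2$ bits and $\overline{N} = 4$, the actual number of states. In the skewed case, $H = 1.5$ bits, so $\overline{N} = 2^{1.5} \approx 2.83$, fewer than the three available states.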

This interpretation also holds for the Rényi entropy, one of the generalizations of information entropy, but there the effective number of states is defined differently. It can be shown that the Rényi entropy corresponds to an effective number of states defined as a weighted power mean, with parameter $q \le 1$, of the quantities $1/p_i$.
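The power-mean statement can be checked numerically. The sketch assumes the standard Rényi entropy $H_\alpha = \frac{1}{1-\alpha}\log\sum_i p_i^\alpha$ (natural logarithm, $\alpha \ne 1$). It identifies the power-mean parameter as $q = 1 - \alpha$, so that $\alpha \ge 0$ gives $q \le 1$. This mapping is an assumption, since the text only names $q$. The helper names are hypothetical.

```python
import math


def renyi_entropy(probs, alpha):
    """H_alpha = log(sum p_i**alpha) / (1 - alpha), natural log; alpha = 1 is excluded."""
    if alpha == 1:
        raise ValueError("alpha = 1 is the Shannon limit")
    return math.log(sum(p ** alpha for p in probs if p > 0)) / (1 - alpha)


def power_mean_of_inverse_probs(probs, q):
    """Weighted power mean (weights p_i, exponent q != 0) of the quantities 1/p_i."""
    return sum(p * (1 / p) ** q for p in probs if p > 0) ** (1 / q)


probs = [0.5, 0.25, 0.125, 0.125]
alphas = [0.0, 0.5, 2.0, 3.0]

for alpha in alphas:
    q = 1 - alpha  # assumed mapping between Rényi order and power-mean parameter
    direct = renyi_entropy(probs, alpha)
    via_mean = math.log(power_mean_of_inverse_probs(probs, q))
    print(f"alpha={alpha}: {direct:.6f} vs {via_mean:.6f}")
```

At $\alpha = 0$ both sides give $\log 4$, the logarithm of the number of states with nonzero probability. As $\alpha \to 1$ ($q \to 0$), the power mean tends to the weighted geometric mean, and $H_\alpha$ tends to Shannon's $H$.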


The concept entered science in the 19th century. It was first applied to the theory of heat engines but quickly spread to other areas of physics, especially the theory of radiation. Soon entropy was being used in cosmology, biology, and information theory. Different fields of knowledge distinguish different kinds of measure of disorder:

- information;

- thermodynamic;

- differential;

- cultural and others.

For example, molecular systems have the Boltzmann entropy, which measures their disorder and homogeneity. Boltzmann established the relationship between this measure of disorder and the probability of a state. In thermodynamics, entropy is regarded as a measure of irreversible energy dissipation; it is a function of the state of a thermodynamic system. In an isolated system, entropy grows toward its maximum value, and the system eventually reaches equilibrium. Information entropy is a measure of uncertainty or unpredictability.
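Boltzmann's relation is usually written $S = k_B \ln W$, where $W$ is the number of microstates that realize a macrostate. The sketch applies it to an assumed toy model that is not from the text: $N$ two-state particles, with the macrostate given by how many are in the "up" state. If every microstate is equally likely, the evenly mixed macrostate has the most microstates, and therefore both the highest probability and the highest entropy.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact SI value)


def microstates(n_particles, n_up):
    """W: number of ways to choose which n_up of n_particles are 'up'."""
    return math.comb(n_particles, n_up)


def boltzmann_entropy(n_particles, n_up):
    """S = k_B ln W for the macrostate 'n_up particles up'."""
    return K_B * math.log(microstates(n_particles, n_up))


def probability(n_particles, n_up):
    """Probability of the macrostate if every microstate is equally likely."""
    return microstates(n_particles, n_up) / 2 ** n_particles


N = 100
macrostates = range(N + 1)
entropies = [boltzmann_entropy(N, n) for n in macrostates]
most_probable = max(macrostates, key=lambda n: probability(N, n))
highest_entropy = max(macrostates, key=lambda n: entropies[n])
print(most_probable, highest_entropy)  # 50 50
```

Because $\ln W = \ln P + N \ln 2$ in this model, entropy and macrostate probability rise and fall together. This is the link between disorder and probability described above.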


For reversible (equilibrium) processes, the following equality holds (a consequence of the so-called Clausius equality):

$$\int_A^B \frac{\delta Q}{T} = S_B - S_A,$$

where $\delta Q$ is the heat supplied, $T$ is the temperature, $A$ and $B$ are states, and $S_A$ and $S_B$ are the entropies of these states. The process considered is the transition from state $A$ to state $B$.

For irreversible processes, the so-called Clausius inequality gives

$$\int_A^B \frac{\delta Q}{T} < S_B - S_A,$$

with the same notation.

Therefore, the entropy of an adiabatically isolated system (no heat supplied or removed) in which irreversible processes occur can only increase. Since $\delta Q = 0$, the left-hand side vanishes and the inequality gives $S_B > S_A$.
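The following is a worked example of the Clausius inequality, with assumed numbers. Heat $Q$ leaks from a hot reservoir at $T_h$ to a cold one at $T_c$, and the pair together is adiabatically isolated. Each reservoir is treated as large enough to keep its temperature fixed, so each entropy change is the heat received divided by the temperature. The total is positive whenever $T_h > T_c$. The function name and values are illustrative.

```python
def total_entropy_change(q_joules, t_hot, t_cold):
    """Entropy change of an isolated pair of reservoirs when heat Q flows hot -> cold."""
    if t_hot <= 0 or t_cold <= 0:
        raise ValueError("temperatures must be absolute (K) and positive")
    ds_hot = -q_joules / t_hot   # the hot reservoir gives up heat Q at T_hot
    ds_cold = q_joules / t_cold  # the cold reservoir receives the same heat at T_cold
    return ds_hot + ds_cold


# Assumed example: 1000 J flows from a 400 K body to a 300 K body.
print(total_entropy_change(1000.0, 400.0, 300.0))  # about 0.833 J/K, i.e. > 0
```

Reversing the flow (cold to hot) would give a negative total, which the Clausius inequality forbids for an isolated system. As $T_h \to T_c$, the change tends to zero, the reversible limit.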

Using the concept of entropy, Clausius (1876) gave the most general formulation of the second law of thermodynamics: in real (irreversible) adiabatic processes, entropy increases, reaching its maximum value in the equilibrium state. The second law is not absolute, since it is violated by fluctuations.

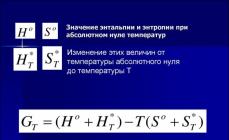

Absolute entropy (S) of a substance or process is the change in available energy during heat transfer at a given temperature (Btu/°R, J/K). Mathematically, entropy equals the heat transferred divided by the absolute temperature at which the process takes place. Consequently, processes that transfer a large amount of heat increase entropy more. Entropy changes are also larger when heat is transferred at low temperatures. Since absolute entropy concerns the availability of all the energy of the universe, temperature is usually expressed in absolute units (°R, K).
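The relation $\Delta S = Q/T$ for heat transferred at constant absolute temperature can be sketched as follows, with assumed inputs. The same heat produces twice the entropy change at half the temperature. The sketch also converts between the two units mentioned, using the International Table Btu: 1 Btu/°R ≈ 1899.1 J/K. Function names are hypothetical.

```python
BTU_IN_JOULES = 1055.05585262  # International Table British thermal unit
RANKINE_IN_KELVIN = 5.0 / 9.0  # size of one degree Rankine in kelvin


def isothermal_entropy_change(q_joules, t_kelvin):
    """Delta S = Q / T for heat Q transferred at a constant absolute temperature T, in J/K."""
    if t_kelvin <= 0:
        raise ValueError("use an absolute temperature above zero")
    return q_joules / t_kelvin


def j_per_k_to_btu_per_rankine(ds_j_per_k):
    """Convert an entropy change from J/K to Btu/°R."""
    return ds_j_per_k * RANKINE_IN_KELVIN / BTU_IN_JOULES


hot = isothermal_entropy_change(1000.0, 600.0)   # the same 1000 J at 600 K ...
cold = isothermal_entropy_change(1000.0, 300.0)  # ... and at 300 K
print(hot, cold, j_per_k_to_btu_per_rankine(cold))
```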

Specific entropy (s) is entropy per unit mass of a substance. The temperatures used in calculating entropy differences between states are often given in degrees Fahrenheit or Celsius. A degree on the Fahrenheit scale equals a degree on the Rankine scale, and a degree Celsius equals a kelvin. For this reason, such equations give the correct result whether entropy is expressed in absolute or conventional units. Entropy has the same reference temperature as the enthalpy of a given substance.

To sum up: entropy increases, so with any action we take, we increase chaos.

Complex things made simple

Entropy is a measure of disorder (and a characteristic of a state). Visually, the more evenly things are distributed in some space, the greater the entropy. If sugar lies in a cup of tea as a lump, the entropy of this state is small; if it is dissolved and distributed throughout the volume, the entropy is large. Disorder can be measured, for example, by counting how many ways objects can be arranged in a given space; the entropy is then proportional to the logarithm of the number of arrangements. If all the socks are folded as compactly as possible into one stack on a closet shelf, there are few possible arrangements, and their number reduces to the number of permutations of the socks within the stack. If the socks can lie anywhere in the room, there is an unimaginable number of ways to arrange them, and, like the shapes of snowflakes, these arrangements never repeat in our lifetime. The entropy of the "socks scattered" state is huge.
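The counting argument can be made concrete with a deliberately crude model, which is an assumption rather than something from the text. The stacked socks can only be permuted. Each scattered sock picks one of a fixed number of spots in the room, and several socks may share a spot. Logarithms are used because the counts themselves are astronomically large.

```python
import math


def log_layouts_stacked(n_socks):
    """Socks in one stack: only their order varies, so W = n! and ln W = ln(n!)."""
    return math.lgamma(n_socks + 1)


def log_layouts_scattered(n_socks, n_spots):
    """Each distinguishable sock may lie on any of n_spots spots: W = n_spots ** n_socks."""
    return n_socks * math.log(n_spots)


n_socks = 20        # hypothetical number of socks
n_spots = 100_000   # hypothetical number of sock-sized spots in the room

print(f"stacked:   ln W = {log_layouts_stacked(n_socks):.1f}")
print(f"scattered: ln W = {log_layouts_scattered(n_socks, n_spots):.1f}")
```

Since entropy is proportional to $\ln W$, the scattered state has several times more entropy even in this crude model, and the gap grows with the size of the room.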

The second law of thermodynamics states that entropy does not decrease in a closed system (usually it increases). Because of it, smoke dissipates, sugar dissolves, and stones and socks scatter over time. The tendency has a simple explanation. Things usually move (whether moved by us or by natural forces) under random impulses that have no common goal. If the impulses are random, everything drifts from order to disorder, because there are always more ways to reach disorder. Imagine a chessboard. A king can leave a corner in three ways, and every one of its possible moves leads away from the corner. It can return to the corner from each neighboring square in only one way, and that move is just one of 5 or of 8 possible moves. If the king is deprived of a goal and allowed to move randomly, it will eventually be found anywhere on the board with comparable probability, and the entropy will be higher.
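The chessboard argument can be simulated. The sketch assumes the king moves to each legal neighboring square with equal probability. It tracks the probability distribution of the king's position and measures disorder as Shannon entropy in bits. Starting from a corner (entropy 0), the entropy grows toward its long-run value. Strictly, the long-run distribution is proportional to the number of moves available from each square (3 in a corner, 5 on an edge, 8 inside), so it is close to uniform but not exactly uniform.

```python
import math

SIZE = 8  # standard chessboard


def king_moves(r, c):
    """Squares a king on (r, c) can reach in one move."""
    return [(r + dr, c + dc)
            for dr in (-1, 0, 1) for dc in (-1, 0, 1)
            if (dr, dc) != (0, 0) and 0 <= r + dr < SIZE and 0 <= c + dc < SIZE]


squares = [(r, c) for r in range(SIZE) for c in range(SIZE)]
NEIGHBORS = {sq: king_moves(*sq) for sq in squares}  # precomputed legal moves


def step(dist):
    """Advance the position distribution by one uniformly random king move."""
    new = {sq: 0.0 for sq in dist}
    for sq, p in dist.items():
        moves = NEIGHBORS[sq]
        for target in moves:
            new[target] += p / len(moves)
    return new


def entropy_bits(dist):
    """Shannon entropy (bits) of a position distribution."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


dist = {sq: 0.0 for sq in squares}
dist[(0, 0)] = 1.0  # the king starts in a corner: no uncertainty at all

history = [entropy_bits(dist)]
for _ in range(1000):
    dist = step(dist)
    history.append(entropy_bits(dist))

print([round(h, 3) for h in history[:6]], round(history[-1], 3))
```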

In a gas or liquid, the role of this disordering force is played by thermal motion. In your room, it is played by your passing urges to go here or there, to lie down, to work, and so on. It does not matter what these urges are; what matters is that they have nothing to do with tidying up and nothing to do with each other. To reduce entropy, the system must be subjected to an external influence, and work must be done on it. For example, according to the second law, the entropy of the room will keep increasing until your mother comes in and asks you to tidy up a bit. The need to do work also means that any system resists a reduction of its entropy and the imposition of order. The Universe tells the same story: entropy began to increase with the Big Bang and will keep growing until Mom comes.

Measure of chaos in the Universe

The classical notion of entropy cannot be applied to the Universe, because gravitational forces act in it and matter itself cannot form a closed system. For the Universe, entropy is in effect a measure of chaos.

The main and largest source of the disorder observed in our world is considered to be the well-known massive objects: black holes, both massive and supermassive.
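For scale, the entropy of a black hole is commonly estimated with the Bekenstein-Hawking formula $S = k_B c^3 A / (4 G \hbar)$. For a non-rotating, uncharged black hole, this reduces to $S/k_B = 4\pi G M^2/(\hbar c)$. The formula is not given in the text. The sketch is an illustration under that assumption, using standard values of the constants and an approximate solar mass.

```python
import math

G = 6.67430e-11         # gravitational constant, m^3 kg^-1 s^-2
HBAR = 1.054571817e-34  # reduced Planck constant, J s
C = 2.99792458e8        # speed of light, m/s
K_B = 1.380649e-23      # Boltzmann constant, J/K
M_SUN = 1.989e30        # solar mass, kg (approximate)


def bh_entropy_in_kb(mass_kg):
    """Bekenstein-Hawking entropy of a Schwarzschild black hole, in units of k_B."""
    return 4 * math.pi * G * mass_kg ** 2 / (HBAR * C)


s_sun = bh_entropy_in_kb(M_SUN)
print(f"{s_sun:.2e} k_B  ({s_sun * K_B:.2e} J/K)")
```

The result is of order $10^{77}\,k_B$ for one solar mass and grows as $M^2$. This scaling is why massive and supermassive black holes are expected to dominate such estimates.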

Attempts to calculate the measure of chaos precisely cannot be called successful, although they are made constantly. All estimates of the entropy of the Universe vary widely, by one to three orders of magnitude. This is not explained only by a general lack of knowledge. There is also insufficient information about how the calculations are affected, not only by all known celestial objects but also by dark energy. The study of dark energy's properties is still in its infancy, and its influence may be decisive. The measure of chaos of the Universe changes all the time. Scientists continue to study it in order to identify general patterns. Once these are known, it will be possible to make fairly reliable forecasts about the existence of various space objects.

Heat death of the Universe

Any closed thermodynamic system has a final state, and the Universe is no exception. When the directed exchange of all kinds of energy stops, all of it degrades into thermal energy. The system passes into a state of heat death when its thermodynamic entropy reaches its highest value. R. Clausius formulated this conclusion about the fate of our world in 1865, taking the second law of thermodynamics as his basis. According to this law, a system that does not exchange energy with other systems tends toward an equilibrium state. That state may well have the parameters characteristic of the heat death of the Universe. But Clausius did not take the influence of gravity into account. In an ideal gas, the maximum of entropy corresponds to particles distributed uniformly over the volume; in the Universe, a uniform distribution of particles cannot correspond to the greatest entropy. It therefore remains unclear whether entropy is an admissible measure of chaos, or of the death of the Universe.

Entropy in our lives

According to the second law of thermodynamics, everything should develop from complex to simple, yet the evolution of life on Earth proceeds in the opposite direction. This apparent contradiction is explained by the thermodynamics of irreversible processes. A living organism, viewed as an open thermodynamic system, takes in less entropy than it releases.

Food has lower entropy than the waste products formed from it. An organism stays alive because it can discard the entropy produced within it by irreversible processes. For example, about 170 g of water leaves the body by evaporation. Through such chemical and physical processes, the human body offsets the entropy it produces.
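The entropy carried off by evaporation can be estimated as $\Delta S = mL/T$, where heat $mL$ leaves the body at body temperature $T$. The sketch takes the 170 g from the text and assumes a latent heat of about $2.41 \times 10^6$ J/kg for water near 37 °C. The text does not state the period over which the 170 g evaporates, so the result is simply per 170 g. Names are illustrative.

```python
LATENT_HEAT = 2.41e6  # J/kg, assumed latent heat of vaporization of water near 37 °C
BODY_TEMP = 310.15    # K, i.e. 37 °C


def entropy_removed_by_evaporation(mass_kg, latent_heat=LATENT_HEAT, temp_k=BODY_TEMP):
    """Entropy leaving the body with evaporated water: Delta S = m * L / T, in J/K."""
    heat = mass_kg * latent_heat  # heat carried away, J
    return heat / temp_k


print(round(entropy_removed_by_evaporation(0.170)))  # roughly 1.3 kJ/K
```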

Entropy is a kind of measure of the freedom of a system's state. The fewer constraints a system has, the higher its entropy, provided it has many degrees of freedom. It follows that zero entropy corresponds to complete information, and maximum entropy to complete ignorance.

Our whole life is pure entropy, since the measure of chaos sometimes exceeds the measure of common sense. Perhaps the day is not far off when we fully arrive at the second law of thermodynamics. It sometimes seems that the development of some people, and even of entire states, has already reversed and now runs from complex to primitive.

Conclusions

Entropy is the name of a function of the state of a physical system whose increase is brought about by reversible heat supply to the system;

the part of internal energy that cannot be transformed into mechanical work;

it is defined exactly by mathematical calculation, which establishes for each system the corresponding state parameter (thermodynamic property) of bound energy. Entropy manifests itself most clearly in thermodynamic processes, which may be reversible or irreversible. In the first case, entropy remains unchanged; in the second, it grows steadily, at the expense of a decrease in mechanical energy.

Consequently, all the many irreversible processes occurring in nature are accompanied by a decrease in mechanical energy, which should ultimately lead to a standstill, a "heat death". But this cannot happen. From the standpoint of cosmology, it is impossible to complete our empirical knowledge of the whole "totality of the Universe" on which a sound application of our notion of entropy would depend. Christian theologians hold that entropy allows one to conclude that the world is finite, and they use this to prove the "existence of God". In cybernetics, the word "entropy" is used in a sense different from its direct meaning, one that can be derived from the classical concept only formally. There it means the average information content, or the uncertainty about the value of the "expected" information.



It should be noted that interpreting Shannon's formula through a weighted average does not justify it. A rigorous derivation comes from combinatorial considerations using Stirling's asymptotic formula. The combinatorial multiplicity of a distribution (that is, the number of ways it can be realized), after taking the logarithm and normalizing, coincides in the limit with Shannon's expression for entropy.
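The combinatorial argument can be checked numerically. For $n$ trials whose outcome counts are $n p_i$, the number of realizations is the multinomial coefficient $W = n!/\prod_i (n p_i)!$, and $\frac{1}{n}\log_2 W$ should approach $H$ as $n$ grows. The sketch uses `math.lgamma` (the log-gamma function, with $\ln\Gamma(k+1) = \ln k!$) instead of Stirling's formula itself, so that large factorials never have to be formed. The distribution is an arbitrary example.

```python
import math


def log2_multinomial(counts):
    """log2 of W = n! / (n_1! * ... * n_k!), via lgamma so no huge integers are formed."""
    n = sum(counts)
    ln_w = math.lgamma(n + 1) - sum(math.lgamma(c + 1) for c in counts)
    return ln_w / math.log(2)


def entropy_bits(probs):
    """Shannon entropy in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


probs = [0.5, 0.25, 0.25]
sizes = [8, 100, 10_000, 1_000_000]  # chosen so that n * p_i are whole numbers
rates = []
for n in sizes:
    counts = [round(p * n) for p in probs]
    rates.append(log2_multinomial(counts) / n)

print(entropy_bits(probs))
for n, rate in zip(sizes, rates):
    print(n, rate)
```

The normalized logarithm stays below $H = 1.5$ bits and approaches it from below, with a gap that shrinks roughly like $(\log n)/n$.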

In the broad sense in which the word is often used in everyday life, entropy means a measure of the disorder or chaos of a system: the less the elements of a system are subject to any order, the higher the entropy.

Shannon entropy can also be characterized axiomatically:

1. Let a system be able to occupy each of $N$ available states with probability $p_i$, where $i = 1, \dots, N$. The entropy $H$ is a function of the probabilities $P = (p_1, \dots, p_N)$ only: $H = H(P)$.
2. For any system $P$, $H(P) \le H(P_{\mathrm{unif}})$, where $P_{\mathrm{unif}}$ is the system with the uniform distribution $p_1 = p_2 = \dots = p_N = 1/N$.
3. Adding a state with $p_{N+1} = 0$ to the system does not change its entropy.
4. The entropy of the combination of two systems $P$ and $Q$ has the form $H(PQ) = H(P) + H(Q/P)$, where $H(Q/P)$ is the conditional entropy of $Q$ averaged over the ensemble $P$.

This set of axioms leads uniquely (up to a constant factor, i.e., the choice of logarithm base) to Shannon's formula for entropy.
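Axioms 2-4 can be spot-checked on a small example. The sketch assumes an arbitrary joint distribution of two dependent systems $P$ and $Q$; the numbers are hypothetical. It computes $H(Q/P)$ as the entropy of $Q$ for each state of $P$, averaged with the weights of $P$. It then checks the additivity rule of axiom 4, the zero-probability rule of axiom 3, and the uniform bound of axiom 2.

```python
import math


def entropy(probs):
    """Shannon entropy in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


# Hypothetical joint distribution p(x, y): x is the state of P, y the state of Q.
joint = {("a", 0): 0.30, ("a", 1): 0.10,
         ("b", 0): 0.15, ("b", 1): 0.45}

# Marginal distribution of P.
p_x = {}
for (x, _), p in joint.items():
    p_x[x] = p_x.get(x, 0.0) + p

# H(Q/P): entropy of Q conditioned on each state x of P, averaged over P.
h_q_given_p = 0.0
for x, px in p_x.items():
    conditional = [p / px for (xx, _), p in joint.items() if xx == x]
    h_q_given_p += px * entropy(conditional)

h_joint = entropy(joint.values())
h_p = entropy(p_x.values())
print(h_joint, h_p + h_q_given_p)                  # axiom 4: equal up to rounding
print(entropy(list(p_x.values()) + [0.0]) == h_p)  # axiom 3: True
print(h_p <= math.log2(len(p_x)))                  # axiom 2: True
```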

Different disciplines

- Thermodynamic entropy is a thermodynamic function that measures the irreversible dissipation of energy in a system.

- In statistical physics, entropy characterizes the probability of a given macroscopic state of the system.

- In mathematical statistics, it is a measure of the uncertainty of a probability distribution.

- Information entropy, in information theory, is a measure of the uncertainty of a message source, determined by the probabilities with which particular symbols appear during transmission.

- The entropy of a dynamical system, in the theory of dynamical systems, is a measure of the chaos in the behavior of the system's trajectories.

- Differential entropy is a formal generalization of the concept of entropy to continuous distributions.

- Reflection entropy is the part of the information of a discrete system that is not reproduced when the system is reflected through the totality of its parts.

- Entropy in control theory is a measure of the uncertainty of the state or behavior of a system under given conditions.
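The following sketch illustrates differential entropy with an assumed example that is not from the text. It compares a numerical evaluation of $h = -\int f(x)\ln f(x)\,dx$ for a normal density with standard deviation $\sigma$ against the known closed form $h = \tfrac12\ln(2\pi e\sigma^2)$. Unlike discrete entropy, differential entropy can be negative, as it is here for small $\sigma$; this is one sign that it is only a formal generalization.

```python
import math


def normal_pdf(x, sigma):
    """Density of a normal distribution with mean 0 and standard deviation sigma."""
    return math.exp(-x * x / (2 * sigma * sigma)) / (sigma * math.sqrt(2 * math.pi))


def differential_entropy_numeric(sigma, half_width=12.0, steps=100_000):
    """h = -∫ f ln f dx by the midpoint rule on [-half_width*sigma, half_width*sigma]."""
    a = -half_width * sigma
    dx = 2 * half_width * sigma / steps
    total = 0.0
    for i in range(steps):
        f = normal_pdf(a + (i + 0.5) * dx, sigma)
        if f > 0:  # far tails may underflow to 0; their contribution is negligible
            total -= f * math.log(f) * dx
    return total


def differential_entropy_exact(sigma):
    """Closed form for the normal distribution: h = 0.5 * ln(2*pi*e*sigma^2), in nats."""
    return 0.5 * math.log(2 * math.pi * math.e * sigma ** 2)


for sigma in (1.0, 0.1):
    print(sigma, differential_entropy_numeric(sigma), differential_entropy_exact(sigma))
```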

In thermodynamics

Clausius first introduced the concept of entropy into thermodynamics in 1865, to define a measure of the irreversible dissipation of energy, that is, of how far a real process deviates from an ideal one. Defined as a sum of reduced heats, it is a function of state. It remains constant in closed reversible processes, whereas in irreversible processes its change is always positive.

Mathematically, entropy is defined as a function of the state of the system, determined up to an arbitrary additive constant. The difference in entropy between two equilibrium states 1 and 2 is, by definition, equal to the reduced heat ($\delta Q/T$) that must be supplied to the system to bring it from state 1 to state 2 along any quasistatic path:

$$\Delta S_{1 \to 2} = S_2 - S_1 = \int_{1 \to 2} \frac{\delta Q}{T}. \qquad (1)$$

Since entropy is defined up to an arbitrary constant, state 1 can be taken by convention as the initial state and assigned $S_1 = 0$. Then

$$S = \int \frac{\delta Q}{T}, \qquad (2)$$

where the integral is taken over an arbitrary quasistatic process. The differential of $S$ is

$$dS = \frac{\delta Q}{T}. \qquad (3)$$

Entropy establishes a link between macrostates and microstates. A distinctive feature of this quantity is that it is the only function in physics that indicates the direction of processes. Since entropy is a state function, its change does not depend on how the system passes from one state to another; it is determined only by the initial and final states.
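Path independence can be illustrated with an ideal gas under assumed conditions: one mole of a monatomic ideal gas, $C_V = \tfrac32 R$, with arbitrarily chosen numbers. Two different quasistatic paths join the same states $(T_1, V_1)$ and $(T_2, V_2)$. Path A heats the gas at constant volume and then expands it isothermally; path B does the same steps in reverse order. Along each leg, $\delta Q/T$ is integrated numerically, using $\delta Q = nC_V\,dT$ at constant volume and $\delta Q = nRT\,dV/V$ at constant temperature. Both paths give the same $\Delta S$, while the heat $Q$ itself differs between them.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol K)


def integrate(f, a, b, steps=20_000):
    """Midpoint-rule approximation of the integral of f from a to b."""
    h = (b - a) / steps
    return h * sum(f(a + (i + 0.5) * h) for i in range(steps))


def isochoric_leg(n, cv, t_start, t_end):
    """Heat and entropy change for constant-volume heating: dQ = n Cv dT."""
    q = integrate(lambda t: n * cv, t_start, t_end)
    ds = integrate(lambda t: n * cv / t, t_start, t_end)
    return q, ds


def isothermal_leg(n, t, v_start, v_end):
    """Heat and entropy change for isothermal expansion: dQ = p dV = n R T dV / V."""
    q = integrate(lambda v: n * R * t / v, v_start, v_end)
    ds = integrate(lambda v: n * R / v, v_start, v_end)
    return q, ds


n, cv = 1.0, 1.5 * R   # one mole of a monatomic ideal gas (assumed)
t1, v1 = 300.0, 0.010  # state 1: K, m^3
t2, v2 = 600.0, 0.020  # state 2: K, m^3

# Path A: heat at V1 up to T2, then expand at T2. Path B: expand at T1, then heat at V2.
qa1, sa1 = isochoric_leg(n, cv, t1, t2)
qa2, sa2 = isothermal_leg(n, t2, v1, v2)
qb1, sb1 = isothermal_leg(n, t1, v1, v2)
qb2, sb2 = isochoric_leg(n, cv, t1, t2)

print("path A: Q =", qa1 + qa2, " dS =", sa1 + sa2)
print("path B: Q =", qb1 + qb2, " dS =", sb1 + sb2)
```

Both entropy changes match the closed form $nC_V \ln(T_2/T_1) + nR\ln(V_2/V_1)$. The heats differ by $nR(T_2 - T_1)\ln(V_2/V_1)$, because heat is not a state function.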

ENTROPY


(from Greek entropia, turning)

the part of the internal energy of a closed system, or of the total energy of the universe, that cannot be used and, in particular, cannot pass or be transformed into mechanical work. Entropy is determined exactly by mathematical calculation. Its effect is seen most distinctly in thermodynamic processes. Thus, heat never passes completely into mechanical work, being converted into other kinds of energy. Notably, in reversible processes entropy remains unchanged, while in irreversible ones it steadily increases, at the expense of a decrease in mechanical energy. Consequently, all irreversible processes occurring in nature are accompanied by a decrease in mechanical energy. This should ultimately lead to a universal standstill, or, in other words, "heat death". But this conclusion holds only if one postulates the universe as a totality, a closed empirical whole. Christian theologians, drawing on entropy, spoke of the finiteness of the world and used it as a proof of the existence of God.

Philosophical Encyclopedic Dictionary, 2010.

ENTROPY

(Greek ἐντροπία, turn, transformation) is a function of the state of a thermodynamic system that characterizes the direction of spontaneous processes in the system and measures their irreversibility. R. Clausius introduced the concept of E. in 1865 to characterize processes of energy conversion; in 1877, L. Boltzmann gave it a statistical interpretation. The concept of E. is used to formulate the second law of thermodynamics: the E. of a thermally insulated system can only increase. In other words, such a system, left to itself, tends toward thermal equilibrium, at which E. is maximal. In statistical physics, E. expresses the uncertainty of the microscopic state of a system. The more microscopic states correspond to a given macroscopic state, the higher its thermodynamic probability and its E. A system with an improbable structure, left to itself, evolves toward the most probable structure, i.e., in the direction of increasing E. This, however, applies only to closed systems, so E. cannot be used to substantiate the heat death of the universe. In information theory, E. is regarded as the lack of information in a system. In cybernetics, the concepts of E. and negentropy (negative entropy) are used to express the degree of organization of a system. This measure is valid for systems that obey statistical laws, but it requires great caution when transferred to biological, linguistic, and social systems.

Lit.: Chambadal P., Development and Applications of the Concept of Entropy [trans. from French], Moscow, 1967; Pierce J., Symbols, Signals and Noise [trans. from English], Moscow, 1967.

L. Fetkin. Moscow.

Philosophical Encyclopedia. In 5 vols. Moscow: Soviet Encyclopedia. Edited by F. V. Konstantinov. 1960-1970.



Entropy is a quantity that characterizes the degree of disorder, as well as the thermal state, of the universe. The Greeks understood the word as "transformation" or "turning", but in astronomy and physics its meaning is somewhat different. In simple terms, entropy is a measure of chaos.
